Neural Networks

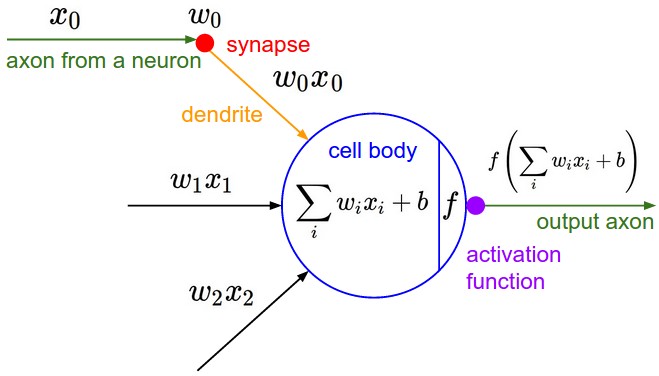

(Artificial) neural networks are mathematical models loosely inspired by the human brain. In the brain, the neuron is the most basic unit. In neural networks, we model a neuron using a perceptron.

When a neuron fires (activates) in the human brain, this may cause other neurons to fire as well. Similarly, the perceptron receives a signal \(x\) from another artificial neuron, and a weight \(w\) controls how much influence that signal has on the output of the perceptron. If \(w\) is negative, the synapse has an inhibitory influence on the neuron. If \(w\) is positive, it has an excitatory influence.

Then, the perceptron “aggregates” the incoming signals \(x_1, \dots, x_n\) using a weighted sum plus a bias \(b\), an extra term that represents the threshold for the artificial neuron to fire:

\[ z = \sum_{i=1}^{n} w_i x_i + b \]
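The weighted sum and bias described above can be sketched in a few lines of plain Python. The function name `activity` and the example inputs, weights, and bias are assumptions chosen for illustration, not values from the text.

```python
def activity(inputs, weights, bias):
    """Return the weighted sum of the inputs plus the bias (the activity level)."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(w * x for w, x in zip(weights, inputs)) + bias


# Hypothetical example: three incoming signals.
x = [1.0, 0.0, 1.0]    # signals from other artificial neurons
w = [0.5, -1.0, 0.25]  # positive = excitatory, negative = inhibitory
b = -0.5               # bias (plays the role of the firing threshold)

z = activity(x, w, b)
print(z)  # 0.25
```

Note that the second weight is negative, so a signal on that input would lower the activity level, which mirrors the inhibitory case described earlier.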

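So far, we’ve calculated the equivalent of the level of activity of our artificial neuron. A biological neuron fires a signal once its activity reaches a certain threshold. Inspired by this, McCulloch and Pitts introduced a threshold (step) activation function \(f\). In its usual form, it outputs 1 when the activity level \(z\) (the weighted sum plus the bias) is non-negative and 0 otherwise:

\[ f(z) = \begin{cases} 1 & \text{if } z \geq 0 \\ 0 & \text{otherwise} \end{cases} \]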

We can then apply \(f\) to the activity level of our neuron, which we calculated before (weighted sum plus bias), to obtain a simple mathematical model of a biological neuron: \(y = f\left(\sum_i w_i x_i + b\right)\).
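Putting the two pieces together, a perceptron can be sketched as the activation function applied to the activity level. This is a minimal illustration, assuming a step function that fires when the activity level is at least zero. The names `step` and `perceptron` and the AND-gate weights are hypothetical choices, not taken from the text.

```python
def step(z):
    """Threshold activation: output 1 (fire) when z >= 0, otherwise 0."""
    return 1 if z >= 0 else 0


def perceptron(inputs, weights, bias):
    """Weighted sum plus bias, passed through the step activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return step(z)


# Hypothetical weights and bias that make the neuron act like a logical AND:
# the bias of -1.5 means both inputs must be active before the neuron fires.
and_weights = [1.0, 1.0]
and_bias = -1.5

outputs = [perceptron([a, b], and_weights, and_bias) for a in (0, 1) for b in (0, 1)]
print(outputs)  # [0, 0, 0, 1]
```

Here the bias acts as the threshold: only when both inputs contribute does the weighted sum overcome it.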

There are many other activation functions, e.g. ReLU and tanh, each with different strengths and weaknesses.
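To make the comparison concrete, the sketch below evaluates ReLU and tanh next to a step function on a few sample activity levels. It uses only the standard library, and the sample values are arbitrary. The definitions are the standard ones: ReLU returns \(\max(0, z)\), and tanh squashes its input into the range \((-1, 1)\).

```python
import math


def step(z):
    """Threshold activation: 1 when z >= 0, otherwise 0 (assumed convention)."""
    return 1.0 if z >= 0 else 0.0


def relu(z):
    """Rectified linear unit: passes positive values, clamps negatives to 0."""
    return max(0.0, z)


# Map a name to each activation so they can be compared side by side.
activations = {"step": step, "relu": relu, "tanh": math.tanh}

for z in (-2.0, 0.0, 2.0):
    row = {name: round(fn(z), 3) for name, fn in activations.items()}
    print(z, row)
```

Unlike the step function, ReLU and tanh produce graded outputs rather than a hard 0 or 1.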

Note

This section is heavily inspired by the introduction to artificial neurons from the CS231n course at Stanford. If you’re looking to supplement your understanding, we recommend taking a look here.